Finer Change Detection with Custom Benchmark Thresholds

Different benchmarks have different performance characteristics. For example, benchmarks that perform I/O or use multiprocessing tend to vary more between runs. This kind of variability is inherent to the benchmark, no matter how it is measured. A single global regression threshold treats all benchmarks the same, which means either accepting false positives from variable benchmarks or missing real regressions in stable ones.

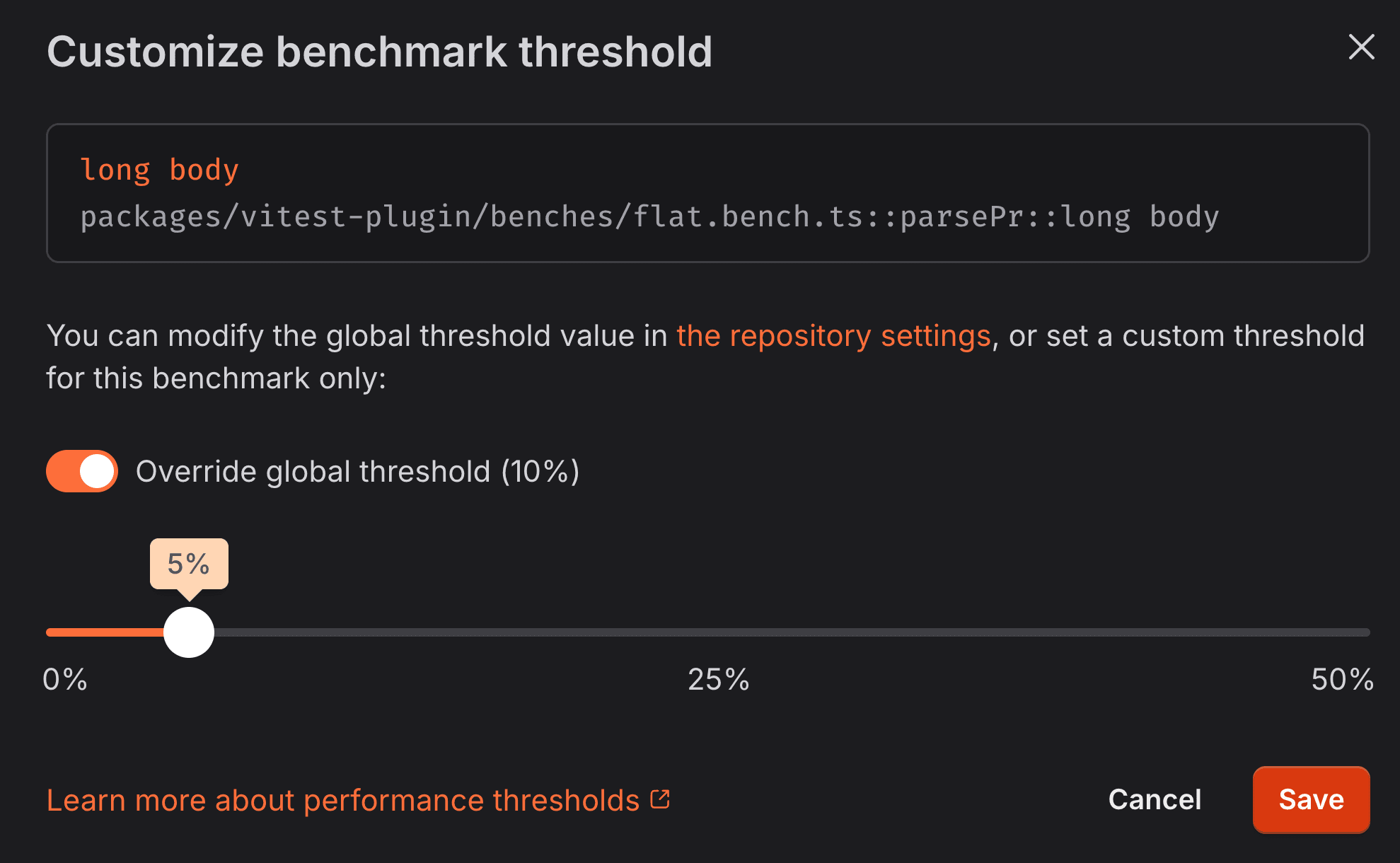

Set a custom threshold between 0% and 50% for any benchmark

You can now set a custom regression threshold for each benchmark. Be strict with stable microbenchmarks that use the CPU simulation instrument, and more lenient with macrobenchmarks that use the walltime instrument, which may have more inherent variability.

Each benchmark gets a threshold suited to its characteristics, helping you catch real performance issues while reducing false positives.
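To make the trade-off concrete, here is a minimal Python sketch of how a per-benchmark threshold might be applied when comparing a run against its baseline. Everything in it is a hypothetical assumption for illustration: the `Benchmark` class, the 5% global default, and the rule that a slowdown strictly above the threshold counts as a regression. It is not CodSpeed's implementation or API; only the 0% to 50% range comes from the feature itself.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical illustration only: names, the default value, and the
# comparison rule are assumptions, not CodSpeed's implementation.
DEFAULT_THRESHOLD = 0.05  # assumed global threshold (5%)
MAX_THRESHOLD = 0.50      # custom thresholds range from 0% to 50%


@dataclass
class Benchmark:
    name: str
    baseline_ns: float                 # measured time on the base branch
    current_ns: float                  # measured time on the new commit
    threshold: Optional[float] = None  # per-benchmark override, if set


def effective_threshold(bench: Benchmark) -> float:
    """Return the benchmark's own threshold, falling back to the global one."""
    if bench.threshold is None:
        return DEFAULT_THRESHOLD
    if not 0.0 <= bench.threshold <= MAX_THRESHOLD:
        raise ValueError(f"{bench.name}: threshold must be between 0% and 50%")
    return bench.threshold


def is_regression(bench: Benchmark) -> bool:
    """Flag a regression when the relative slowdown exceeds the threshold."""
    change = (bench.current_ns - bench.baseline_ns) / bench.baseline_ns
    return change > effective_threshold(bench)


benches = [
    # Stable CPU-simulation microbenchmark: strict 2% threshold, +3% slowdown.
    Benchmark("parse_json", 1_000.0, 1_030.0, threshold=0.02),
    # Noisy walltime macrobenchmark: lenient 20% threshold, +12% swing.
    Benchmark("http_roundtrip", 50_000.0, 56_000.0, threshold=0.20),
    # No override: uses the assumed 5% global threshold, +4% change.
    Benchmark("sort_ints", 2_000.0, 2_080.0),
]

for b in benches:
    print(b.name, is_regression(b))
# parse_json True
# http_roundtrip False
# sort_ints False
```

With a single global 5% threshold, the 3% slowdown in `parse_json` would go unnoticed while the 12% swing in the noisier `http_roundtrip` would be flagged; the per-benchmark values reverse both outcomes.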

Learn more

Check out our documentation to learn more about: