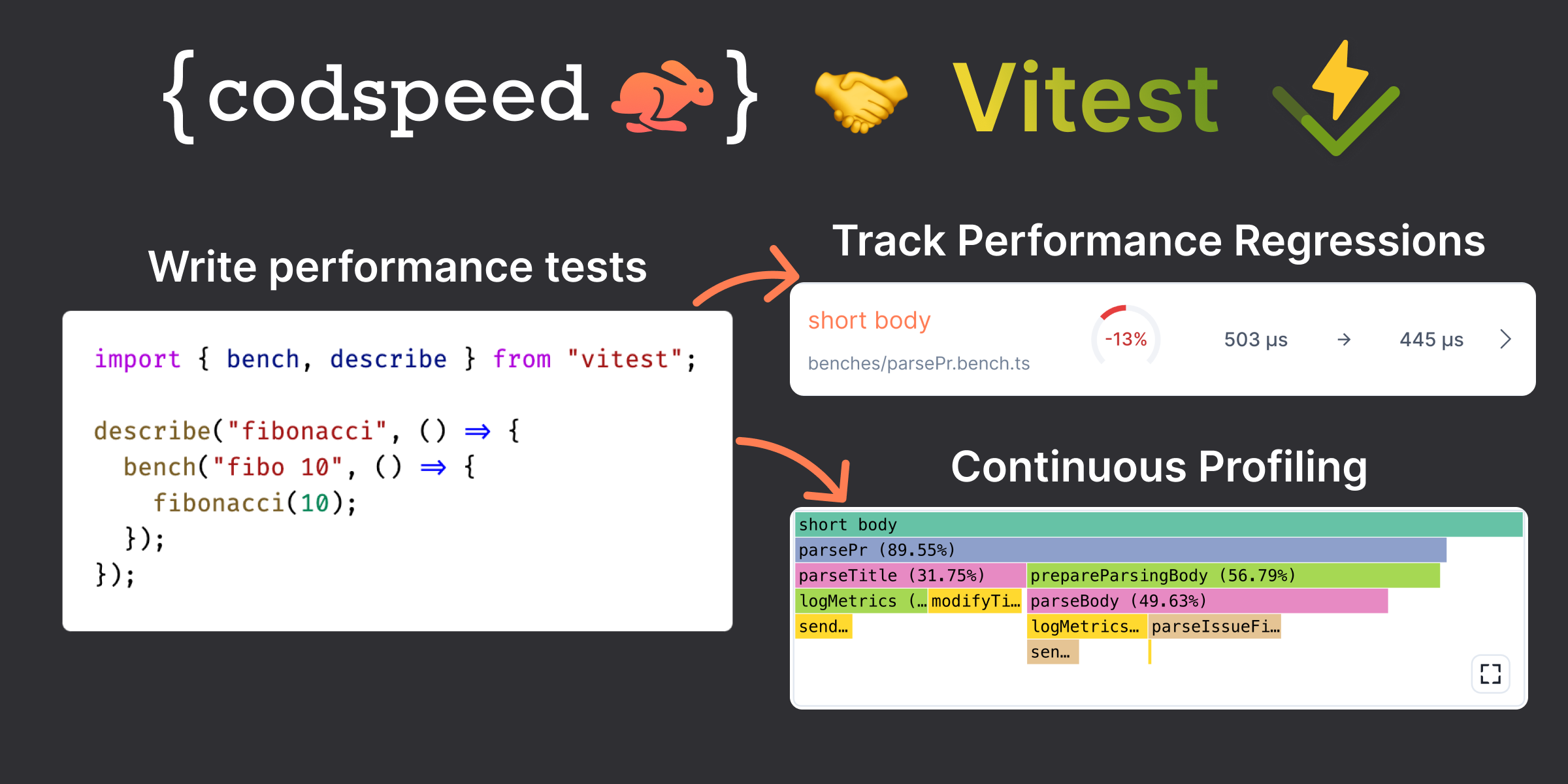

Using Vitest bench to track performance regressions in your CI

One of the easiest ways to track performance regressions in your JavaScript applications is to use Vitest's bench API. It lets you write benchmarks as easily as unit tests:

    import { bench, describe, expect, test } from "vitest";
    import { fibonacci } from "./fibonacci";

    describe("fibonacci", () => {
      test("should return 55 when called with 10", () => {
        expect(fibonacci(10)).toBe(55);
      });

      bench("fibo 10", () => {
        fibonacci(10);
      });
    });

Then you can run the benchmarks with vitest bench:

    pnpm vitest bench

The problem we now face is that the results are inconsistent between runs. Running the command several times, whether on a CI/CD runner or on your own machine, yields different results each time, which makes it hard to spot performance regressions with confidence.
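To get a feel for this run-to-run noise, here is a minimal sketch that times the same workload several times and reports how far apart the runs are. The helpers measureRuns and relativeSpread are hypothetical names introduced for illustration; they are not part of Vitest or CodSpeed, and wall-clock timing like this is only a rough indicator.

```typescript
// Naive recursive Fibonacci, the same shape as the function benchmarked in this article.
function fibonacci(n: number): number {
  if (n <= 1) return n;
  return fibonacci(n - 1) + fibonacci(n - 2);
}

// Hypothetical helper: call `fn` `iterations` times, repeat that `runs` times,
// and return one wall-clock duration (in milliseconds) per run.
function measureRuns(fn: () => void, runs: number, iterations: number): number[] {
  const durations: number[] = [];
  for (let r = 0; r < runs; r++) {
    const start = performance.now();
    for (let i = 0; i < iterations; i++) fn();
    durations.push(performance.now() - start);
  }
  return durations;
}

// Hypothetical helper: (max - min) / mean, a rough measure of run-to-run noise.
function relativeSpread(samples: number[]): number {
  const mean = samples.reduce((sum, x) => sum + x, 0) / samples.length;
  return (Math.max(...samples) - Math.min(...samples)) / mean;
}

const durations = measureRuns(() => { fibonacci(15); }, 5, 10000);
console.log(durations.map((d) => d.toFixed(2) + " ms").join(", "));
console.log("spread: " + (relativeSpread(durations) * 100).toFixed(1) + "% of the mean");
```

The exact numbers depend on the machine and on whatever else is running at the time, which is precisely the problem when you try to compare two commits.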

This is where using CodSpeed comes in handy. CodSpeed provides a way to run benchmarks in a consistent manner, and thus detect performance regressions with confidence.

We are excited to announce that CodSpeed now has first-class support for Vitest!

How to use Vitest with CodSpeed

Install the @codspeed/vitest-plugin package and vitest as dev dependencies:

    pnpm add -D @codspeed/vitest-plugin vitest

The CodSpeed plugin is only compatible with vitest@1.0.0 and above.

Create or update the vitest.config.ts file at the root of your project:

    import codspeedPlugin from "@codspeed/vitest-plugin";
    import { defineConfig } from "vitest/config";

    export default defineConfig({
      plugins: [codspeedPlugin()],
      // ...
    });

If you are on a project using vite and vitest, you can also add the plugin to the vite.config.ts file.

Let's create a fibonacci function and create some benchmarks on it. Create a new file src/fibonacci.ts:

    export function fibonacci(n: number): number {
      if (n <= 1) return n;
      return fibonacci(n - 1) + fibonacci(n - 2);
    }

Create a new file src/fibonacci.bench.ts:

    import { bench, describe } from "vitest";
    import { fibonacci } from "./fibonacci";

    describe("fibonacci", () => {
      bench("fibo 10", () => {
        fibonacci(10);
      });

      bench("fibo 15", () => {
        fibonacci(15);
      });
    });

Now we can run the benchmarks with vitest bench:

    $ vitest bench
    [CodSpeed] bench detected but no instrumentation found, falling back to default vitest runner

     RUN  v1.0.3 ...

     ✓ src/fibonacci.bench.ts (2) 1521ms
       ✓ fibonacci (2) 1520ms
         name               hz     min     max    mean     p75     p99    p995    p999     rme  samples
       · fibo 10  1,760,687.42  0.0005  1.0279  0.0006  0.0006  0.0007  0.0007  0.0016  ±0.50%   880344   fastest
       · fibo 15    173,932.15  0.0052  0.0594  0.0057  0.0059  0.0064  0.0070  0.0147  ±0.08%    86967

     BENCH  Summary

      fibo 10 - src/fibonacci.bench.ts > fibonacci
        10.12x faster than fibo 15

We can see that the CodSpeed plugin has been detected and that the benchmarks have been run. Since no instrumentation was found, the plugin fell back to the default Vitest runner, so for the moment we see the default output of Vitest's bench API.
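To help read this table, the sketch below redoes its arithmetic. It assumes that hz is operations per second and that the timing columns are in milliseconds, which is consistent with the numbers shown but remains an assumption here. hzFromMeanMs and speedup are hypothetical helper names, not part of Vitest.

```typescript
// Assumption: `hz` is operations per second and `mean` is in milliseconds,
// so a mean of 0.0057 ms corresponds to roughly 1000 / 0.0057 operations per second.
function hzFromMeanMs(meanMs: number): number {
  return 1000 / meanMs;
}

// Ratio shown in the summary: how many times faster one benchmark is than another.
function speedup(fastHz: number, slowHz: number): number {
  return fastHz / slowHz;
}

// hz values copied from the output above.
const fibo10Hz = 1760687.42;
const fibo15Hz = 173932.15;

console.log(speedup(fibo10Hz, fibo15Hz).toFixed(2) + "x"); // prints "10.12x"

// The table rounds `mean` to four decimals, so this only approximates
// the reported 173,932.15 hz for fibo 15.
console.log(Math.round(hzFromMeanMs(0.0057))); // prints 175439
```

The small gap between the recomputed value and the reported hz comes from the rounding of the mean column, not from a different formula.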

Running the benchmarks in your CI

To generate performance reports, you need to run the benchmarks in your CI. This allows CodSpeed to detect the CI environment and properly configure the instrumented environment.

Enable the repository on CodSpeed

The first step is to log in to CodSpeed and import the repository you want to track. You can find more information about this in the CodSpeed documentation.

After installing the GitHub app and importing the repository in CodSpeed, you can proceed to the next step and create a benchmarks workflow.

Add a CodSpeed GitHub Actions workflow

Open a Pull Request on your repo with the previous changes, and the following GitHub Actions workflow .github/workflows/codspeed.yml. It will run the benchmarks and report the results to CodSpeed on every push to the main branch and every pull request:

    name: CodSpeed

    on:
      push:
        branches:
          - "main"
      pull_request:

    permissions: # optional for public repositories
      contents: read # required for actions/checkout
      id-token: write # required for OIDC authentication with CodSpeed

    jobs:
      benchmarks:
        name: Run benchmarks
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - uses: pnpm/action-setup@v2
          - uses: actions/setup-node@v3
            with:
              cache: pnpm
          - name: Install dependencies
            run: pnpm install
          - name: Run benchmarks
            uses: CodSpeedHQ/action@v3
            with:
              run: pnpm vitest bench

If everything went well, the workflow should run and, after it finishes, you should see a comment from the CodSpeed GitHub app on the Pull Request with the run report.

Visualizing the benchmarks on the CodSpeed app

By clicking on the CodSpeed comment in the Pull Request, you will be redirected to the CodSpeed report of the PR. You will see a list of the benchmarks, and when clicking on the fibo 10 benchmark its flame graph will appear:

Flame graph component taken from the CodSpeed app, where it is fully interactive.

That's it for setting up CodSpeed with Vitest. Now we can explore a bit more of what CodSpeed offers.

Visualizing a performance change

One of the main features of CodSpeed is to detect and visualize the performance changes of a pull request.

Let's now say that we are on a more complex project where we have a parsePr function that parses a GitHub pull request. We created a pull request with some performance improvements, and the following is in the CodSpeed report:

The Diff graph shows the difference between the head and base runs, allowing us to pinpoint where the performance changes are inside the code.

We observe a 25% improvement in the overall benchmark, driven by improvements in the parsePr and parseIssueFixed functions. Although the logMetrics function has regressed, its impact on the overall benchmark is smaller than that of the improvements. Additionally, a new function, modifyTitle, has been introduced and is shown in a distinctive blue color.

Thanks to the differential flame graph, it is now easy to see at a glance which code changes affect performance.
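The idea behind such a diff can be sketched in a few lines. The code below is only an illustration, not how CodSpeed computes its report: the helper names are hypothetical, and the per-function costs are made-up numbers in arbitrary units, chosen so that the total matches the 25% improvement described above.

```typescript
type Timings = Record<string, number>;
type Change = "improved" | "regressed" | "unchanged" | "new" | "removed";

// Hypothetical helper: label each function by comparing its cost in the base and head runs.
function classify(base: Timings, head: Timings): Record<string, Change> {
  const result: Record<string, Change> = {};
  const names = Object.keys(base).concat(Object.keys(head));
  for (let i = 0; i < names.length; i++) {
    const name = names[i];
    if (!(name in base)) result[name] = "new";
    else if (!(name in head)) result[name] = "removed";
    else if (head[name] < base[name]) result[name] = "improved";
    else if (head[name] > base[name]) result[name] = "regressed";
    else result[name] = "unchanged";
  }
  return result;
}

// Hypothetical helper: total cost of a run.
function total(timings: Timings): number {
  return Object.keys(timings).reduce((sum, name) => sum + timings[name], 0);
}

// Made-up costs (arbitrary units), NOT taken from the actual report.
const base: Timings = { parsePr: 60, parseIssueFixed: 30, logMetrics: 10 };
const head: Timings = { parsePr: 40, parseIssueFixed: 18, logMetrics: 12, modifyTitle: 5 };

const changes = classify(base, head);
const improvement = (total(base) - total(head)) / total(base);

console.log(changes);
console.log("overall improvement: " + (improvement * 100).toFixed(0) + "%");
```

With these illustrative numbers, the regression in logMetrics and the cost of the new modifyTitle function are outweighed by the gains in the two parsing functions, so the overall benchmark still improves.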

Wrapping up

And there you have it: CodSpeed now supports Vitest to make performance testing a breeze for your JavaScript and TypeScript projects! With an easy-to-use bench API and the magic touch of CodSpeed's consistency, tracking regressions is as simple as adding a unit test.

Get ready to supercharge your CI with performance reports, flame graphs, and more!

If you enjoyed this article, you can follow us on Twitter to get notified when we publish new articles and product updates.

Resources

- Vitest integration in the CodSpeed documentation

- @codspeed/vitest-plugin on GitHub

- CPU Simulation in the CodSpeed documentation

- Vitest bench API