CPython 3.13 was released two weeks ago and this release is the most performance-oriented in some time. After a quick read of the release notes, a few things stand out for the impact they can have on the performance:

- CPython can now run in free-threaded mode, with the global interpreter lock (GIL) disabled

- a brand new just-in-time (JIT) compiler has been added

- CPython now bundles the

mimallocallocator out of the box

Let's focus on the free-threaded mode in this article to see how to leverage this change and how it can impact the performance of Python applications by measuring performance with CodSpeed.

⏭️ The JIT and

mimallocperformance will be covered in the next post. Stay tuned!

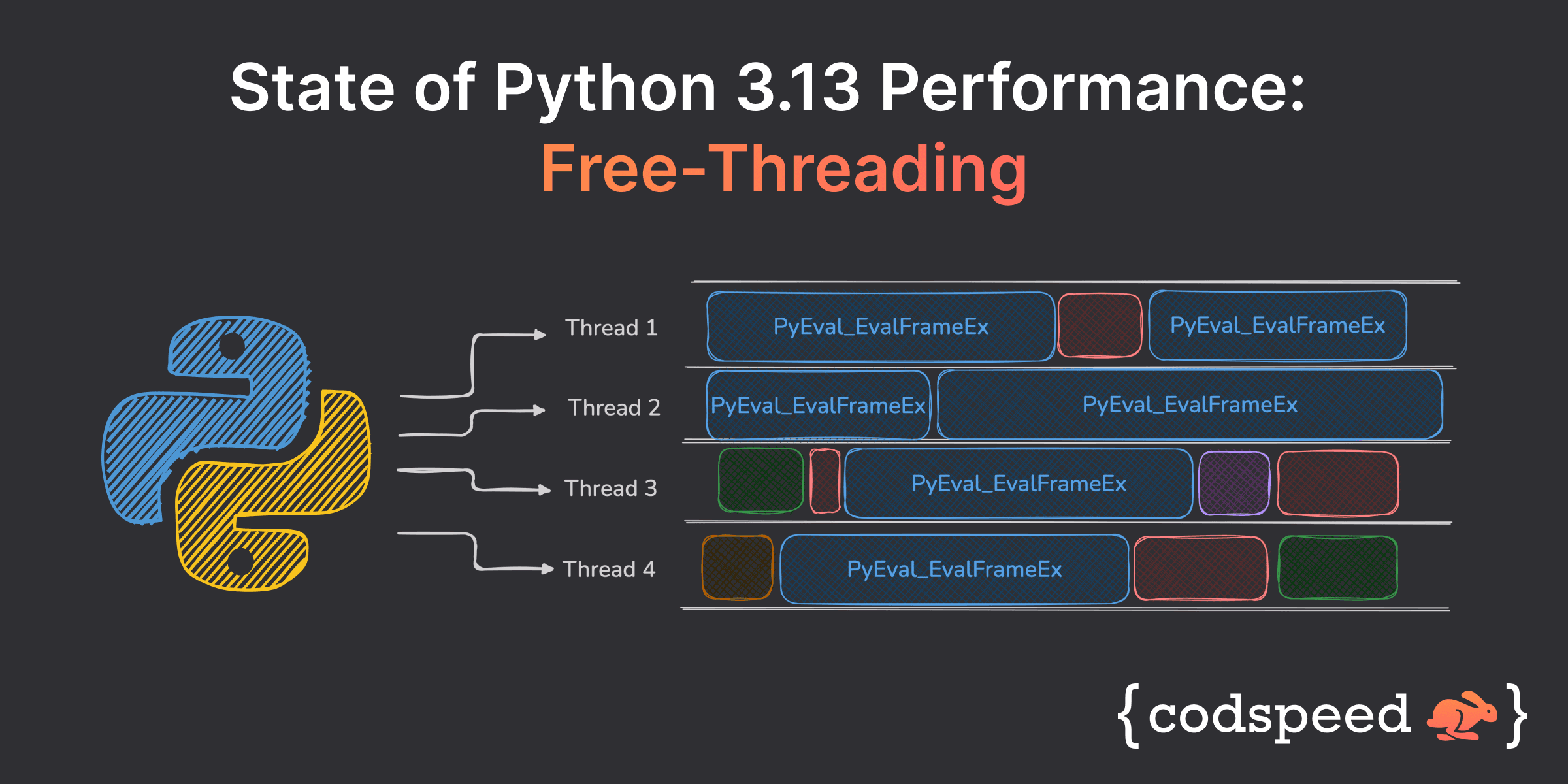

Free-threaded CPython

Free-threading is an experimental feature in Python 3.13 that allows CPython to run without the Global Interpreter Lock (GIL). The GIL is a mutex preventing multiple threads from executing Python bytecode simultaneously. This design choice has simplified CPython's memory management and made the C API easier to work with. However, it has also been one of the most significant barriers to utilizing modern multi-core processors effectively.

The Multiprocessing Workaround

The traditional solution has been to use the multiprocessing module, which

spawns separate Python processes instead of threads and while this approach

works, it comes with significant limitations:

-

Memory Overhead: Each process requires its own Python interpreter instance and memory space. For data-intensive applications, this can quickly become a bottleneck.

-

Communication Cost: Processes can't share memory directly. Data must be serialized and deserialized when passed between processes, which adds overhead and complexity.

-

Startup Time: Creating new processes is significantly slower than creating threads, making it impractical for tasks that require frequent spawning of workers.

Real-World Impact: The PageRank Example

To illustrate these limitations, let's consider implementing PageRank, the algorithm that powered Google's early search engine. PageRank is an ideal example because it:

- Is compute-intensive (matrix operations)

- Works with large datasets (the web graph)

- Can benefit significantly from parallelization

A naive multithreaded implementation in Python 3.12 or earlier would be bottlenecked by the GIL during matrix operations, while a multiprocessing version would struggle with:

- The memory overhead of copying the graph to each process

- The cost of transferring partial results between processes

- The complexity of managing shared state

Before we proceed, it's important to clarify that our focus here isn't on the specifics of the PageRank algorithm itself but rather on the parallelization so we won't go into the details of the algorithm itself here.

Let's look at how we would implement this with those different concurrency models.

Textbook Implementation (Single-Threaded)

def pagerank_single(matrix: np.ndarray, num_iterations: int) -> np.ndarray:

"""Single-threaded PageRank implementation"""

size = matrix.shape[0]

# Initialize scores

scores = np.ones(size) / size

for _ in range(num_iterations):

new_scores = np.zeros(size)

for i in range(size):

# Get nodes that point to current node

incoming = np.where(matrix[:, i])[0]

for j in incoming:

# Add score contribution from incoming node

new_scores[i] += scores[j] / np.sum(matrix[j])

# Apply damping factor

scores = (1 - DAMPING) / size + DAMPING * new_scores

return scoresThe highlighted lines show the two most computationally intensive parts of the algorithm. The first computes the score contribution from incoming nodes, while the second applies the damping factor, incorporating the new scores into the final result.

Parallelizing the first part will be the most beneficial and easy to implement

since we can divide the range and thus efficiently use multiple threads to

compute the new_scores array.

Multithreaded Implementation

For the multithreaded implementation, we'll start by dividing the matrix into multiple chunks:

chunk_size = size // num_threads

chunks = [(i, min(i + chunk_size, size)) for i in range(0, size, chunk_size)]Each thread will then work on a different chunk of the matrix, updating the new scores:

def _thread_worker(

matrix: np.ndarray,

scores: np.ndarray,

new_scores: np.ndarray,

start_idx: int,

end_idx: int,

lock: threading.Lock,

):

size = matrix.shape[0]

local_scores = np.zeros(size)

for i in range(start_idx, end_idx):

incoming = np.where(matrix[:, i])[0]

for j in incoming:

local_scores[i] += scores[j] / np.sum(matrix[j])

with lock:

new_scores += local_scores It's important to note that updating the new_scores array is done behind a

lock to prevent race conditions. This could potentially become a bottleneck if

the lock is held for too long, but in practice, parallelizing the first part of

the algorithm should provide a significant speedup already.

Finally, we'll feed the chunks to each of the threads:

new_scores = np.zeros(size)

lock = threading.Lock()

with concurrent.futures.ThreadPoolExecutor(max_workers=num_threads) as executor:

# Process chunks in parallel #

futures = executor.map(

lambda args: _thread_worker(*args), # starmap isn't available on ThreadPoolExecutor #

[

(matrix, scores, new_scores, start_idx, end_idx, lock)

for start_idx, end_idx in chunks

],

)

new_scores = (1 - DAMPING) / size + DAMPING * new_scores

scores = new_scoresMultiprocessing Implementation

Essentially, the multiprocessing implementation is extremely similar to the

threading one, let's focus on the differences:

-

Each worker, will now return the

local_scoresarray instead of updating a sharednew_scoresarray since processes can't share memory directly. The local scores will then be aggregated in the main process:# Combine results new_scores = sum(chunk_results)While this should be faster than the threading version, it still incurs the overhead of the inter-process communication which can become very significant for large datasets.

-

Instead of using a

ThreadPoolExecutor, it will use amultiprocessing.Pool. The APIs are very similar, butmultiprocessing.Poolwill spawn a pool of processes instead of threads:with multiprocessing.Pool(processes=num_processes) as pool: # Process chunks in parallel # chunk_results = pool.starmap(_process_chunk, chunks) # Combine results new_scores = sum(chunk_results) new_scores = (1 - DAMPING) / size + DAMPING * new_scores scores = new_scores

Measuring Performance

In order to measure the actual performance changes, let's build a performance test. First things first, we need to generate some testing data:

def create_test_graph(size: int) -> np.ndarray:

# Fixed seed #

np.random.seed(0)

# Create random adjacency matrix with ~5 outgoing edges per node

matrix = np.random.choice([0, 1], size=(size, size), p=[1 - 5/size, 5/size])

# Find nodes with no outgoing edges

zero_outdegree = ~matrix.any(axis=1)

zero_indices = np.where(zero_outdegree)[0]

# For each node with no outgoing edges, add a random edge

if len(zero_indices) > 0:

random_targets = np.random.randint(0, size, size=len(zero_indices))

matrix[zero_indices, random_targets] = 1

return matrixAs highlighted, we're using a fixed seed to ensure reproducibility from one run to the next. This is important when comparing the performance of different implementations. Here we're building some fake connections between pages to build a realistic graph but the mathematical operations would be exactly the same with an empty matrix as long as the size is the same.

Next, we'll use

pytest-codspeed, a pytest

plugin to measure the performance of the different implementations with various

parameter and with multiple builds/versions of CPython.

First let's define the benchmark cases:

@pytest.mark.parametrize(

"pagerank",

[

pagerank_single,

partial(pagerank_multiprocess, num_processes=8),

partial(pagerank_multithread, num_threads=8),

],

ids=["single", "8-processes", "8-threads"],

)

@pytest.mark.parametrize(

"graph",

[

create_test_graph(100),

create_test_graph(1000),

create_test_graph(2000),

],

ids=["XS", "L", "XL"],

)

def test_pagerank(

benchmark: BenchmarkFixture,

pagerank: PagerankFunc,

graph: np.ndarray,

):

benchmark(pagerank, graph, num_iterations=10)Here we're testing the 3 implementations with 3 different graph sizes. The

benchmark fixture is provided by pytest-codspeed and will measure the

execution time of the pagerank function with the given args.

Then, let's write a GitHub Actions workflow to measure the performance with various builds of CPython on CodSpeed's infrastructure:

on:

push:

jobs:

codspeed:

runs-on: codspeed-macro # requests a CodSpeed Macro runner for the jobs #

strategy:

matrix:

python-version: ["3.12", "3.13"]

include:

- { python-version: "3.13t", gil: "1" }

- { python-version: "3.13t", gil: "0" }

env:

UV_PYTHON: ${{ matrix.python-version }}

steps:

- uses: actions/checkout@v4

- name: Install uv

uses: astral-sh/setup-uv@v3

- name: Install CPython & dependencies

run: uv sync --all-extras

- name: Run benchmarks

uses: CodSpeedHQ/action@v3

env:

PYTHON_GIL: ${{ matrix.gil }}

with:

run: uv run pytest --codspeed --codspeed-max-time 10 -vs src/tests.pyHere we're running the benchmarks with Python 3.12, 3.13, and 3.13 with free

threading support (3.13t), both with and without the GIL. Running 3.13 both

with and without free-threading support will allow us to see its impact on the

performance even when the GIL is enabled.

The python builds used are pulled by uv directly from

python-build-standalone

built with GCC 6.3.0 20170516

The jobs will run on CodSpeed Macro runners, which are ARM64 bare-metal instances with 16 cores and 32GB of RAM, dedicated to each job.

Results

Time (in s) for the L graph size, less is better

- Without enabling new build options both 3.12 and

3.13 perform very similarly.

We also clearly see the limitation of the

multiprocessingimplementation being even slower than the single-threaded one due to the overhead of the inter-process communication.

- As expected the

threadingbased implementation is the fastest when running 3.13t with no GIL and the GIL is effectively not limiting the parallel execution of the threads anymore.

- Still, when running with the free threaded build both with and without the GIL, we see a significant slowdown for all other implementations. This is mostly because the free-threaded build requires the specializing adaptive interpreter to be disabled, thus clearly decreasing the performance of the other implementations. This overhead should be reduced in the 3.14 release where the specializing adaptive interpreter will be thread-safe and thus will be re-enabled. At that point, migrating to the free-threaded build should be a no-brainer for a lot of parallel applications and it will be interesting to measure the performance changes.

For all other graph sizes, the results are very similar and the conclusions are

the same. From this measurement, we can see that the new free-threaded build of

CPython 3.13 can have a significant impact on the performance of parallel

applications, bringing a very relevant alternative to multiprocessing. Still

it's experimental and not yet ready for production use because of the overall

slowdown it introduces, but it's a very promising step in the right direction!

Note

This benchmark doesn't include subinterpreters, which is another way to run

Python code parallelly without the GIL introduced in Python 3.12. Subintepreters

proved to be slower than other approaches in most cases, mostly because where

data sharing and inter-worker communication has not been fully solved yet. But

when it's there, it might definitely be a nice alternative to multiprocessing.

Resources

-

py-free-threading: a centralized collection of documentation and trackers around compatibility with free-threaded CPython

-

Docs on having the Specializing Adaptive Interpreter without the GIL