Why Rust doesn't need a standard div_rem: An LLVM tale

During the last advent of code, while starting with Rust, I discovered that the

Rust standard library was missing the usual div_mod(a.k.a. div_rem) function

that would simultaneously return the quotient and remainder. I got confused

since I already used the operator in C++ with

std::div or Python with

divmod. It seemed a

basic way of improving performance since both values would come out with the

same DIV assembly instruction, nicely avoiding extra CPU cycles.

Looking it up, I found an existing RFC created in 2017, but it hasn't received much attention since.

So, do we need a div_rem implementation in the standard library? I decided to

investigate.

A naive approach

Let's naively implement the function:

pub fn naive_div_rem(a: u32, b: u32) -> (u32, u32) {

(a / b, a % b)

}To dissect what is executed we're gonna analyze the compilation steps.

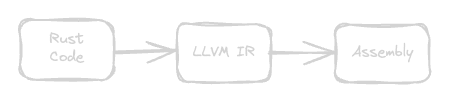

First, let's have a look at the LLVM Intermediate Representation(IR). If you're not familiar with it, is an extremely low-level programming language close to assembly but without being tied to specific hardware. It's extremely useful for compiler writers since it's a common language that can be used to target multiple architectures. It's also a great tool to understand what the compiler is doing and how it "interpret" our code.

For the previous code, here is the annotated LLVM IR we can obtain:

; Define a function called 'example::naive_div_rem

define { i32, i32 } @example::naive_div_rem(i32 %a, i32 %b) unnamed_addr {

start:

%_0 = alloca { i32, i32 }, align 4; Allocate memory for our return tuple

%_4 = icmp eq i32 %b, 0; Check if divisor %b is zero

br i1 %_4, label %panic, label %bb1; If %b is zero, panic, else continue

bb1:

%_3 = udiv i32 %a, %b; Perform unsigned division(only get the quotient)

%_6 = icmp eq i32 %b, 0; Check again for the divisor being zero (for the modulo)

br i1 %_6, label %panic1, label %bb2; If %b is zero, panic, else continue

bb2:

%_5 = urem i32 %a, %b; Compute the unsigned remainder

; ...

; Stores quotient and the remainder in a tuple, load them back,

; and constructs a return tuple with the values.

; ...

ret { i32, i32 } %8

; ... omitted panic handlers ...

}We can witness that this code contains redundant checks for the division and

modulo by 0. Also, we can note two operations to get our quotient and remainder:

udiv and urem. This is expected at this stage since LLVM doesn't have an

instruction combining them but hopefully, the compiler will be able to optimize

this while generating the assembly.

Let's have a look at the next compilation step so we can dive into the assembly

code generated from the IR(x86_64 with optimizations enabled):

example::naive_div_rem:

test esi, esi ; Test if esi (divisor) is zero

je .LBB0_2 ; Jump to panic block if esi is zero

mov eax, edi ; Move the dividend into eax

xor edx, edx ; Zero out edx to prepare for the division

div esi ; Unsigned division, quotient goes into eax, remainder into edx

ret

.LBB0_2:

; ... "attempt to divide by zero" panic handler ...And voila! We can see that the compiler was able to optimize our code and fused

the two operations into a single div instruction. So having a dedicated

implementation wouldn't be that helpful for performance while using rustc

since this optimization should almost always be done by the IR compiler.

You might have noticed quite a few things in the assembly code, let's have a look at them by creating our own assembly version.

An inline assembly version

use std::arch::asm;

pub fn asm_div_rem(a: u32, b: u32) -> (u32, u32) {

let mut tmp: u32 = a;

let mut remainder: u32 = 0;

unsafe {

asm!(

"div {divisor}",

inout("eax") tmp,

inout("edx") remainder,

divisor = in(reg) b,

options(pure, nomem, nostack),

);

}

(tmp, remainder)

}A few things to note in this implementation:

- The

divinstruction divides theeaxregister by its operand value. It will store the quotient ineaxand the remainder inedx. tmpholds the dividend before our assembly is executed. It will contain the quotient after. This is because its value is tied to theeaxregister and it will be used both for input and output thanks to theinoutoperand.remainderis also passed with theinoutoperand, allowing us to clear theedxregister before the operation is done sinceremainderis 0 before the assembly is executed. At the end, the register will hold the remainder value that we return in the output tuple.- The divisor is passed through any general purpose register as a simple input

value that we pass directly to the

divinstruction. - Finally, we pass a few flags that will help the compiler to know how our

inline assembly will behave with

options. Here: our block has no side effect, we're neither reading nor writing to the memory and we don't push anything to the stack.

Compiling this, we can see that it ends up a bit more concise than the previous compiled assembly we built:

example::asm_div_rem:

mov eax, edi ; Move the value of the dividend from EDI to EAX.

xor edx, edx ; Zero out EDX to prepare for the division.

div rsi ; Signed divide the dividend in EAX by the value in RSI('b').

ret ; Return from the function. quotient -> EAX, remainder -> EDX.It should be faster but we're cheating a bit on rust here since we're not taking into account the panic check that was done in the previous version.

It's faster! The overhead of those panic checks is not that dramatic in the end. Branch prediction is probably contributing a lot to this. It was eluded in the first IR snippets but in there, the rust compiler hints the rest of the pipeline that those checks are likely to succeed. The compiler's ability to optimize and hint that these checks are more likely to pass than fail can lead to surprisingly efficient code, even when it appears to be doing "much more" than the assembly version.

The cost of panic handling: an unchecked version

Now that we built our unchecked version in assembly, we can get quite close to

it by using unchecked div and rem from std::intrisics:

#![feature(core_intrinsics)]

pub unsafe fn unchecked_naive_div_rem(a: u32, b: u32) -> (u32, u32) {

let quotient = std::intrinsics::unchecked_div(a, b);

let remainder = std::intrinsics::unchecked_rem(a, b);

(quotient, remainder)

}And its intermediate representation:

; Define a function named 'example::unchecked_naive_div_rem' that takes two arguments

define { i32, i32 } @example::unchecked_naive_div_rem(i32 noundef %a, i32 noundef %b) unnamed_addr {

start:

%_0 = alloca { i32, i32 }, align 4; Allocate memory for a tuple to store the quotient and remainder

%quotient = udiv i32 %a, %b; Perform unsigned division of %a by %b

%remainder = urem i32 %a, %b; Compute the unsigned remainder of %a / %b

; ...

; Stores quotient and remainder in a tuple, loads them back, and constructs a return tuple with the values.

; ...

ret { i32, i32 } %1

}As you can see this IR doesn't contain anything about overflow checks and the performance should be close to or even better than our assembly version.

That's exactly the same time our assembly version takes. So let's have a look at the assembly:

example::unchecked_naive_div_rem:

mov eax, edi

xor edx, edx

div esi

retAnd it's the same as our assembly version! So bothering writing our assembly version is not really worth it in the end.

To conclude, there is no performance drawback of not having a single div_rem

function since the compiler optimizations will take care of this burden for us.

It might seem odd to write those two operations but unless very specific

optimization killer cases they will be fused as a single assembly instruction.

If you enjoyed this article, you can follow us on Twitter to get notified when we publish new articles.

Resources

- Compiler Explorer: an amazing tool to visualize the assembly and IR generated by the compiler

- Understanding LLVM IR

- The faker's guide to reading (x86) assembly

- Guide to x86 Assembly